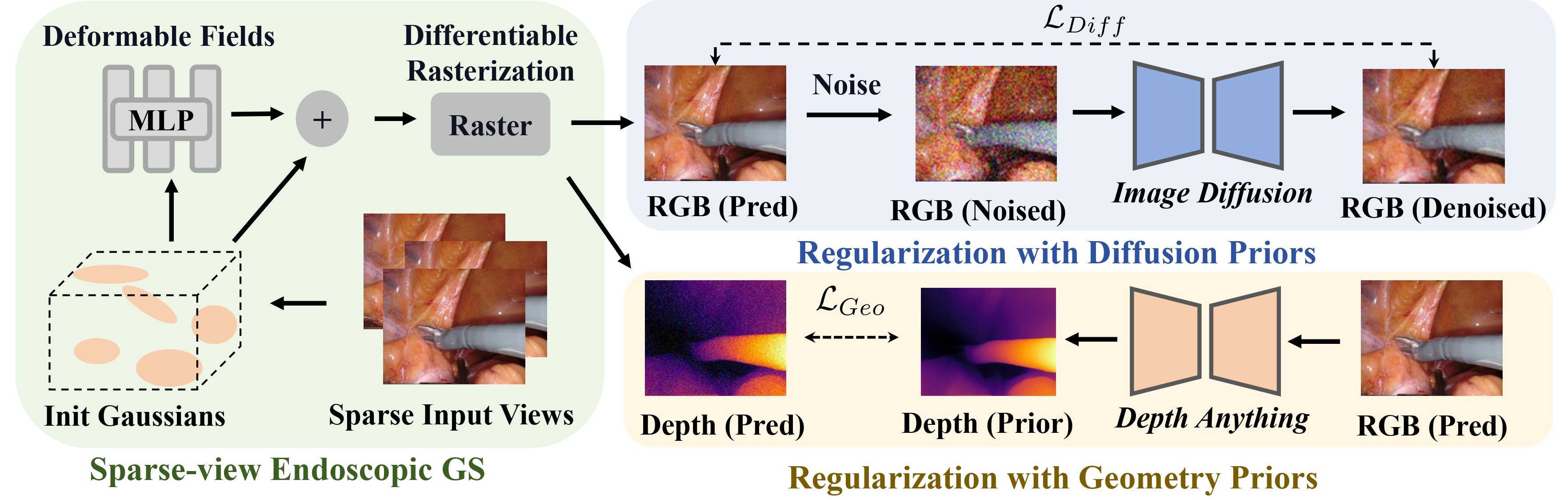

Network

Experimental Results

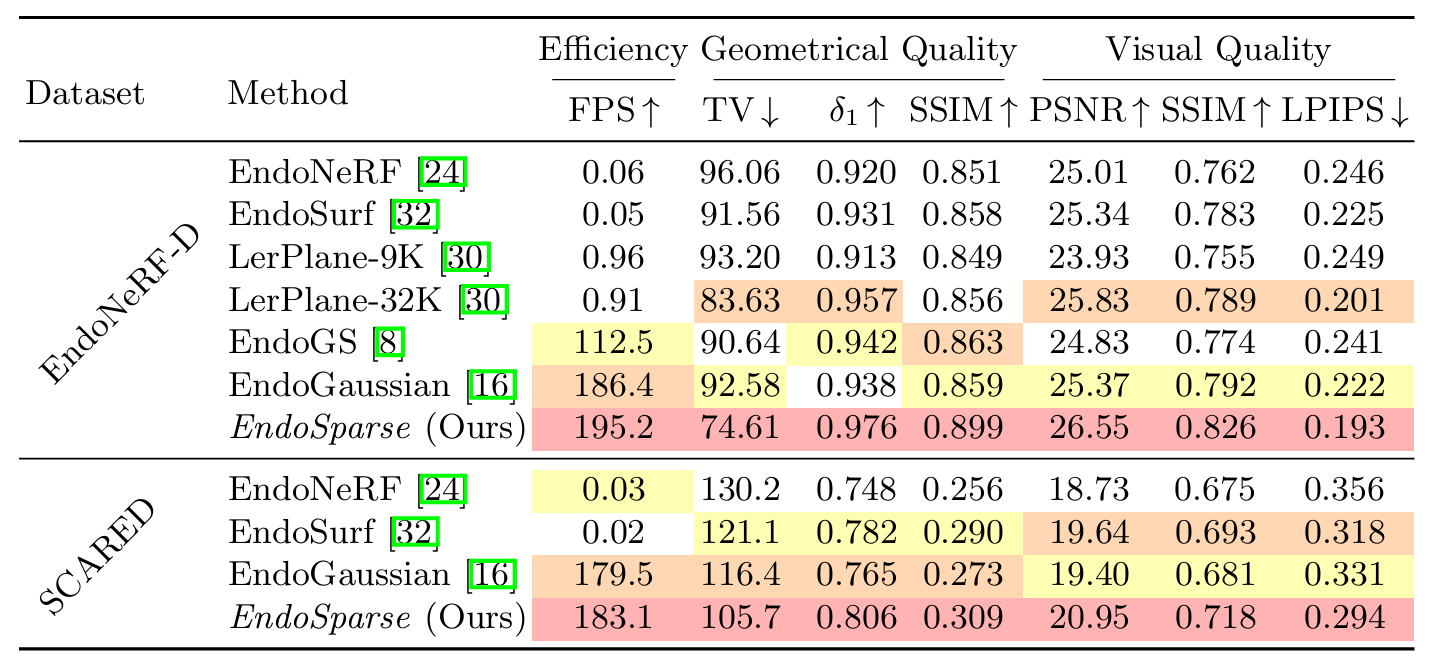

Quantitative Results

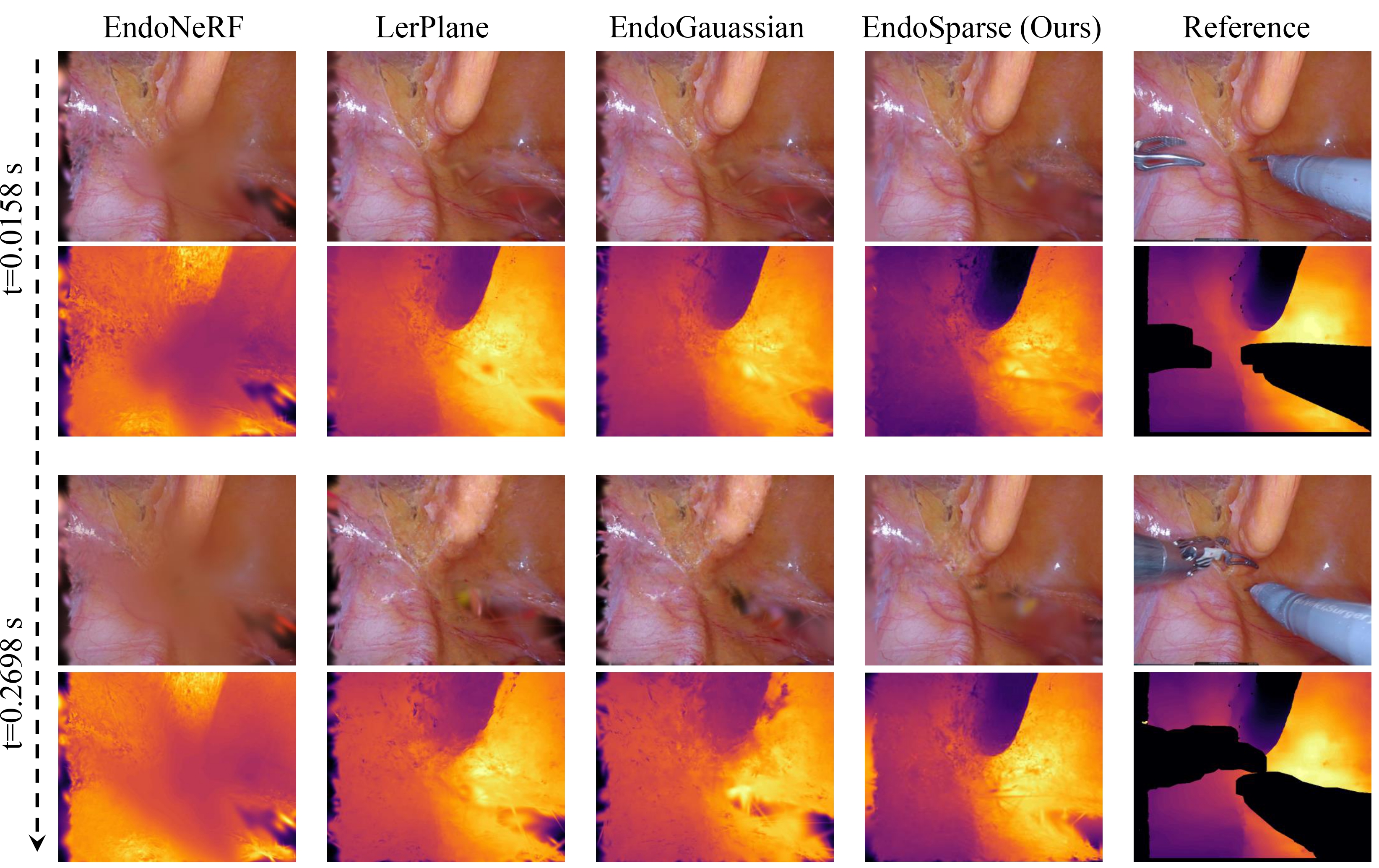

Qualitative Results

Citation

@article{li2024endosparse,
  author = {Chenxin Li and Brandon Y. Feng and Yifan Liu and Hengyu Liu and Cheng Wang and Weihao Yu and Yixuan Yuan},
  title = {EndoSparse: Real-Time Sparse View Synthesis of Endoscopic Scenes using Gaussian Splatting},
  journal = {arXiv preprint},
  year = {2024}
}

Relevant Works

EndoGaussian: Real-time Gaussian Splatting for Dynamic Endoscopic Scene Reconstruction

An initial exploration into real-time surgical scene reconstruction built on 3D Gaussian Splatting.

LGS: A Light-weight 4D Gaussian Splatting for Efficient Surgical Scene Reconstruction

An initial investigation into memory-efficient surgical scene reconstruction that achieves over 9× compression while preserving high visual quality and efficiency.

Endora: Video Generation Models as Endoscopy Simulators

A pioneering exploration into high-fidelity medical video generation on endoscopy scenes.

U-KAN Makes Strong Backbone for Medical Image Segmentation and Generation

An innovative enhancement of U-Net for medical image tasks using Kolmogorov-Arnold Networks (KANs).